LLM Prompting Explained: How It Differs From Training

Data scientist specializing in natural language processing and AI ethics.

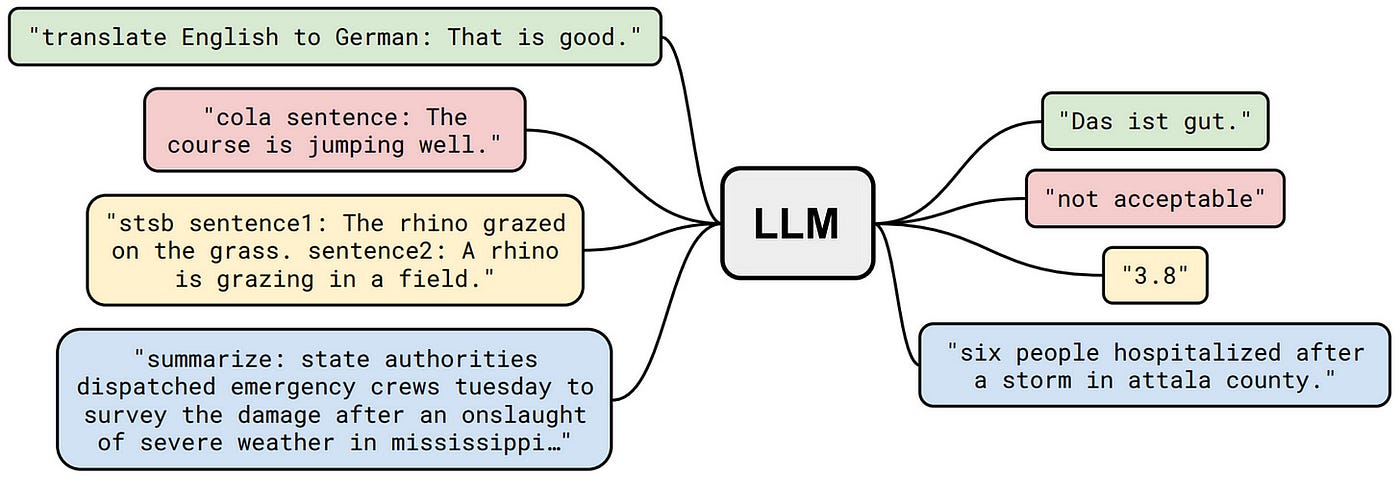

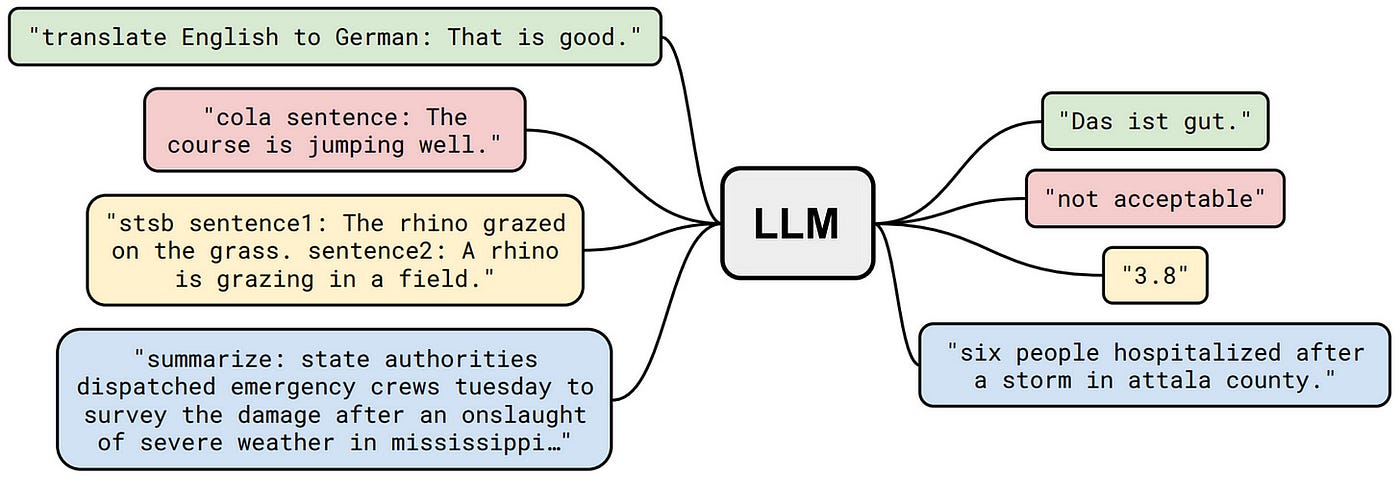

Large Language Models (LLMs) have revolutionized how we interact with AI: they can generate human-like text, translate languages, and perform a variety of creative tasks. But how do we get these models to do what we want? That's where LLM prompting comes in. Prompting is the art and science of crafting effective inputs, or "prompts," that guide these models toward a desired output. A prompt is a piece of text or a set of instructions that you give an LLM to trigger a specific response or action. Think of it as the initial seed that directs the model's knowledge and capabilities.

Traditional programming involves writing code in a specific language to instruct a computer to perform a task. It's precise and deterministic; the code dictates exactly what the computer will do. With LLMs, prompting is different. Instead of writing code, you use natural language to instruct the model. This approach is less rigid and more flexible, allowing for a more intuitive way to interact with AI. You're essentially guiding the LLM, not dictating every step. This shift from code to natural language is a fundamental difference between the two approaches.

To fully understand the power of prompting, it's essential to distinguish it from training LLMs. While both are crucial for utilizing these models effectively, they serve different purposes and involve different processes.

LLM training is the process of feeding a large language model with massive amounts of text data, allowing it to learn the patterns, relationships, and nuances of language. This process is computationally intensive and requires vast resources. It's like teaching a student by providing them with a library of books to read. The goal is to equip the LLM with a broad understanding of language and the world, enabling it to perform a variety of tasks.

LLM prompting, on the other hand, is about using natural language to instruct a trained model to perform a specific task. It's akin to asking that well-read student to write an essay on a particular topic. The model uses its pre-existing knowledge and understanding of language to generate a response based on your prompt. Prompting doesn't alter the model's internal parameters; rather, it guides the model's output based on the input it receives.

| Feature | LLM Training | LLM Prompting |
|---|---|---|
| Purpose | To build a broad understanding of language | To guide the model towards a specific task |
| Process | Computationally intensive, requires large datasets | Uses natural language to instruct the model |
| Resource Requirements | High computational resources and time | Relatively low resources |
| Parameter Adjustment | Adjusts the model's internal parameters | Does not change the model's parameters |
| Flexibility | Less flexible, requires retraining for new tasks | More flexible, adaptable to various tasks |

Prompt engineering is the core skill that unlocks the potential of LLMs. It involves crafting prompts that elicit the desired response. Several techniques are available to optimize prompts for different tasks.

Zero-shot prompting involves instructing the LLM to perform a task without providing any examples. The model relies solely on its pre-existing knowledge and understanding to generate the desired output. This technique is useful for straightforward tasks where the model can infer the desired behavior from the instructions alone. For example, you might ask, "Translate this sentence into Spanish." The LLM, without prior examples, can typically perform this task effectively.
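A zero-shot prompt is simply the instruction followed by the input, with no worked examples. The sketch below only assembles the prompt string; `build_zero_shot_prompt` is a hypothetical helper, and the call that sends the string to a model is left out because it depends on which provider or library you use.

```python
def build_zero_shot_prompt(instruction: str, text: str) -> str:
    """Return a zero-shot prompt: the instruction, a blank line, then the input. No examples."""
    return f"{instruction}\n\n{text}"


prompt = build_zero_shot_prompt(
    "Translate this sentence into Spanish.",
    "The library opens at nine.",
)
print(prompt)
```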

Few-shot prompting, in contrast, involves providing the LLM with a few examples of the desired input-output format. This technique helps the model learn in context, guiding it toward the correct response. If zero-shot prompting proves insufficient, switching to few-shot prompting might help. For instance, if you're asking an LLM to categorize text, you might include a few sample texts, each paired with its correct category, before the text you want classified.
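A few-shot prompt for text categorization might be assembled as in the minimal sketch below. The sentiment labels and example texts are illustrative assumptions, and `build_few_shot_prompt` is a hypothetical helper; the key idea is that the query reuses the exact format of the examples and leaves the label blank for the model to complete.

```python
def build_few_shot_prompt(examples, query, instruction="Classify the sentiment of each text."):
    """Build a few-shot prompt: instruction, labeled examples, then the unlabeled query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Category: {label}")
        lines.append("")
    # The query follows the same format but leaves the category empty for the model.
    lines.append(f"Text: {query}")
    lines.append("Category:")
    return "\n".join(lines)


examples = [
    ("The battery lasts all day and charges fast.", "positive"),
    ("The screen cracked within a week.", "negative"),
    ("It arrived on Tuesday.", "neutral"),
]
prompt = build_few_shot_prompt(examples, "Setup took five minutes and everything worked.")
print(prompt)
```

Keeping the example format identical to the query format matters more than the exact wording: it is what lets the model infer the pattern it should continue.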

Chain of Thought (CoT) prompting is a more advanced technique that enhances the reasoning abilities of LLMs. It instructs the model to break down complex tasks into simpler sub-steps and show its reasoning step-by-step. This is particularly useful for complex reasoning tasks, such as math problems or logical deductions. For example, instead of directly asking for the answer to a math problem, you might ask the LLM to "Solve this problem step-by-step." This encourages the model to lay out its reasoning, which often improves accuracy on multi-step problems and makes the response easier to check.
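In practice, a CoT prompt often also asks for the final result in a fixed format so the answer can be pulled out of the reasoning text. The sketch below assumes an `Answer: <number>` convention of our own choosing; `build_cot_prompt` and `extract_answer` are hypothetical helpers, and `sample_response` is hand-written to show the format, not real model output.

```python
import re


def build_cot_prompt(problem: str) -> str:
    """Ask for step-by-step reasoning plus a final line in a parseable format."""
    return (
        f"{problem}\n\n"
        "Solve this problem step-by-step. "
        "After your reasoning, write the final result on its own line as 'Answer: <number>'."
    )


def extract_answer(response: str):
    """Return the number on the last 'Answer:' line, or None if no such line exists."""
    matches = re.findall(r"^Answer:\s*(-?\d+(?:\.\d+)?)\s*$", response, flags=re.MULTILINE)
    return matches[-1] if matches else None


# Hand-written response in the requested format (not real model output).
sample_response = (
    "A pen costs 3 dollars, so 4 pens cost 4 * 3 = 12 dollars.\n"
    "Adding a 5 dollar notebook gives 12 + 5 = 17 dollars.\n"
    "Answer: 17"
)
print(extract_answer(sample_response))  # 17
```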

Building upon CoT, Tree of Thought (ToT) prompting guides an LLM to explore several lines of reasoning instead of just one. Unlike CoT, which follows a single linear path, ToT branches into alternative intermediate steps ("thoughts"), evaluates how promising each branch is, and abandons weak ones, so it can back away from dead ends. This method maps out a more comprehensive range of possibilities and contingencies, which can lead to more creative and diverse problem-solving.
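The branching-and-pruning loop behind ToT can be sketched as a breadth-first search that keeps only the best few partial solutions at each depth. In this toy version, `propose_thoughts` and `score_thought` are deterministic stand-ins (hypothetical) for the two LLM calls ToT relies on, one that proposes next steps and one that rates partial solutions. The task (reach a target number from a start value using "+3" and "×2" steps) is an assumption chosen only so the example runs without a model.

```python
def propose_thoughts(state):
    """Stand-in for asking an LLM for candidate next steps.
    A state is (current_value, steps_so_far)."""
    value, steps = state
    return [
        (value + 3, steps + [f"{value} + 3 = {value + 3}"]),
        (value * 2, steps + [f"{value} * 2 = {value * 2}"]),
    ]


def score_thought(state, target):
    """Stand-in for asking an LLM how promising a partial solution looks (higher is better)."""
    value, _ = state
    return -abs(target - value)


def tree_of_thought_search(start, target, beam_width=3, max_depth=4):
    """Breadth-first search over thoughts, pruning to the best `beam_width` branches per level."""
    frontier = [(start, [])]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in propose_thoughts(state)]
        for value, steps in candidates:
            if value == target:
                return steps
        # Prune: keep only the most promising branches for the next level.
        candidates.sort(key=lambda s: score_thought(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return None


print(tree_of_thought_search(1, 11))  # ['1 + 3 = 4', '4 * 2 = 8', '8 + 3 = 11']
```

With a real model, each stand-in would become a prompt, and depth-first search with backtracking is an equally valid choice of search strategy.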

Meta prompting focuses on structuring and guiding LLM responses in a more organized and efficient manner. Instead of relying on detailed examples, meta prompting emphasizes the format and logic of queries. For example, in a math problem, you might outline the steps needed to come up with the right answer, like "Step 1: Define the variables. Step 2: Apply the relevant formula. Step 3: Solve." This approach helps the LLM generalize across different tasks without relying on specific content.
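Because a meta prompt encodes structure rather than content, the same scaffold can wrap many different problems. The sketch below reuses the three math steps from the paragraph above; `build_meta_prompt` and `MATH_STEPS` are hypothetical names.

```python
MATH_STEPS = [
    "Define the variables.",
    "Apply the relevant formula.",
    "Solve.",
]


def build_meta_prompt(problem: str, steps) -> str:
    """Wrap any problem in the same numbered step structure, independent of its content."""
    outline = "\n".join(f"Step {i}: {step}" for i, step in enumerate(steps, start=1))
    return (
        "Follow this structure in your answer.\n"
        f"{outline}\n\n"
        f"Problem: {problem}"
    )


print(build_meta_prompt("A rectangle is 3 m by 5 m. What is its area?", MATH_STEPS))
```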

Self-consistency prompting improves the accuracy of chain-of-thought reasoning. Instead of relying on a single, potentially flawed line of logic, this technique samples multiple reasoning paths for the same question and then selects the answer that most of them agree on. It's particularly effective for arithmetic and commonsense reasoning tasks.
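The selection step amounts to a majority vote over final answers. The sketch below assumes you have already sampled several chain-of-thought completions (typically with a nonzero temperature) and parsed a final answer from each, using `None` where parsing failed; the sampled answers shown are hypothetical, and `self_consistent_answer` is an illustrative helper.

```python
from collections import Counter


def self_consistent_answer(final_answers):
    """Majority vote over answers parsed from independently sampled reasoning paths.
    Returns the winning answer and the share of parsed answers that agree with it."""
    valid = [a for a in final_answers if a is not None]
    if not valid:
        return None, 0.0
    answer, votes = Counter(valid).most_common(1)[0]
    return answer, votes / len(valid)


# Final answers parsed from five hypothetical sampled chains of thought.
sampled = ["17", "17", "15", "17", None]
print(self_consistent_answer(sampled))  # ('17', 0.75)
```

The agreement share is a useful by-product: a low value suggests the question is ambiguous or the model is unsure.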

Iterative prompting involves refining your prompts based on the model's responses. You start with a basic prompt, and then, based on the output, you can tweak it further. This is a useful technique when you are not sure about the best way to phrase your prompt.
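One way to make the refinement loop explicit is to pair each requirement with an instruction to add when the output misses it. In the sketch below, `refine_prompt` is a hypothetical helper, and `fake_model` is a toy stand-in for a real LLM call that only answers briefly when asked to; the word-limit check is an assumed example of a requirement.

```python
def refine_prompt(prompt, generate, checks, max_rounds=3):
    """Iteratively tighten a prompt.

    generate: callable prompt -> output (your model call; a stand-in here).
    checks:   list of (passes(output) -> bool, instruction to add if it fails).
    """
    for _ in range(max_rounds):
        output = generate(prompt)
        fixes = [fix for passes, fix in checks if not passes(output) and fix not in prompt]
        if not fixes:
            # Either every check passed or there is nothing new to try.
            return prompt, output
        prompt = prompt + "\n" + " ".join(fixes)
    return prompt, generate(prompt)


def fake_model(prompt):
    """Toy stand-in for an LLM: only answers briefly when the prompt asks for it."""
    if "at most 20 words" in prompt:
        return "Prompting steers a trained model with input text; training changes its weights."
    return "Prompting and training differ in many ways. " * 10


CHECKS = [(lambda out: len(out.split()) <= 20, "Answer in at most 20 words.")]
final_prompt, final_output = refine_prompt(
    "Explain how prompting differs from training.", fake_model, CHECKS
)
print(final_prompt)
print(final_output)
```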

While most prompting techniques convey what you do want, negative prompting specifies what you don't want. This is more popular in text-to-image models, but it can also be used in LLMs to specify what the model should avoid in its response, such as using contractions or certain phrases.
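A minimal sketch of negative prompting for an LLM: append explicit "do not" rules, then check the output, since models do not always honor negative instructions. `add_negative_constraints` and `find_contractions` are hypothetical helpers, and the contraction pattern is a rough assumption (straight apostrophes only, and it also flags possessives).

```python
import re


def add_negative_constraints(prompt, avoid):
    """Append an explicit list of things the response must not do."""
    rules = "\n".join(f"- Do not {item}." for item in avoid)
    return f"{prompt}\n\nConstraints:\n{rules}"


# Rough pattern: straight apostrophes only; it also matches possessives like "Ann's".
CONTRACTION = re.compile(r"\b\w+'(?:t|s|re|ve|ll|d|m)\b", re.IGNORECASE)


def find_contractions(text):
    """Return contraction-like tokens so a response can be rejected or retried."""
    return CONTRACTION.findall(text)


prompt = add_negative_constraints(
    "Write a two-sentence product description for a desk lamp.",
    ["use contractions", "use the phrase 'game-changer'"],
)
print(prompt)
print(find_contractions("It's bright, and you won't need a second lamp."))  # ["It's", "won't"]
```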

While prompting is a powerful tool, it's essential to understand how it compares to fine-tuning or retraining a model. Both aim to improve an LLM's performance, but they do so in different ways.

Fine-tuning involves adjusting a pre-trained model's parameters on a specialized dataset to enhance its performance on a specific task. This process requires more resources than prompt engineering but can lead to more precise results.

The first step in fine-tuning is selecting and preparing high-quality, relevant data. The data should mirror the tasks or contexts that the model will encounter. This involves cleaning, labeling, and possibly augmenting the data to ensure it's suitable for training.

You can also adjust training hyperparameters or add task-specific layers to better suit particular tasks. For example, you can add a specialized output layer for a classification task or tune the learning rate. Parameter-efficient techniques like Low-Rank Adaptation (LoRA) can also reduce the number of trainable parameters: the original weights stay frozen and only small low-rank matrices are trained.
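The savings from LoRA follow from simple arithmetic. For a frozen weight matrix of shape d_out × d_in, LoRA trains a pair of matrices B (d_out × r) and A (r × d_in) whose product forms the update, so it learns r·(d_out + d_in) values instead of d_out·d_in. The sketch below assumes a single square 4096 × 4096 projection and rank 8 purely for illustration.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters updated when fine-tuning the full weight matrix W (d_out x d_in)."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """LoRA trains B (d_out x rank) and A (rank x d_in); W itself stays frozen."""
    return rank * (d_out + d_in)


d = 4096  # assumed size of one square projection matrix
full = full_params(d, d)
lora = lora_trainable_params(d, d, rank=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```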

The crux of fine-tuning involves training the model further on the selected dataset. This step requires careful management of learning rates, batch sizes, and other training parameters to avoid overfitting. Techniques like dropout, regularization, and cross-validation can help mitigate this risk.

Prompt engineering, on the other hand, focuses on crafting and refining input prompts to guide the model's output without retraining. It's a more iterative process that involves experimenting with different prompt structures and wordings.

Manual prompt engineering involves crafting prompts by hand to guide the model towards the desired output. It's a creative process that relies on understanding how the model responds to different input types.

Prompt templates use placeholders or variables that can be filled with specific information to generate tailored prompts. This approach allows for dynamic prompt generation based on the task’s context.
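Python's standard-library `string.Template` is one lightweight way to implement this. In the sketch below, the template text and placeholder names (`audience`, `doc_type`, `max_sentences`, `document`) are illustrative assumptions.

```python
from string import Template

SUMMARY_TEMPLATE = Template(
    "You are helping a $audience.\n"
    "Summarize the following $doc_type in at most $max_sentences sentences.\n\n"
    "$document"
)

prompt = SUMMARY_TEMPLATE.substitute(
    audience="busy product manager",
    doc_type="bug report",
    max_sentences=3,
    document="The export button fails when the file name contains spaces.",
)
print(prompt)
```

`substitute` raises `KeyError` if a placeholder is left unfilled, which catches incomplete prompts early; `safe_substitute` would instead leave the placeholder in place.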

Soft prompt engineering involves learning a set of embeddings (soft prompts) that are prepended to the input to steer the model toward the desired output. This method typically requires gradient-based optimization but does not modify the original model parameters.
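The core idea (trainable vectors prepended to the input while the model stays frozen) can be shown with a toy. In the sketch below, a tiny linear function stands in for a frozen LLM, and the gradient is written out by hand; both are simplifying assumptions, since real soft-prompt tuning runs in an autodiff framework against a full model. `frozen_model` and `train_soft_prompt` are hypothetical names.

```python
def frozen_model(weights, sequence):
    """Toy frozen 'model': dot product of fixed weights with the mean embedding."""
    dim = len(weights)
    mean = [sum(vec[j] for vec in sequence) / len(sequence) for j in range(dim)]
    return sum(w * m for w, m in zip(weights, mean))


def train_soft_prompt(weights, input_embeddings, target, n_prompt=2, lr=0.5, steps=200):
    """Learn prompt vectors prepended to the input; the model's weights are never updated."""
    dim = len(weights)
    soft_prompt = [[0.0] * dim for _ in range(n_prompt)]
    seq_len = n_prompt + len(input_embeddings)
    for _ in range(steps):
        output = frozen_model(weights, soft_prompt + input_embeddings)
        # For loss = (output - target) ** 2, the gradient w.r.t. prompt[i][j]
        # is 2 * (output - target) * weights[j] / seq_len.
        scale = 2.0 * (output - target) / seq_len
        for vec in soft_prompt:
            for j in range(dim):
                vec[j] -= lr * scale * weights[j]
    return soft_prompt


weights = [1.0, -0.5]              # frozen model parameters
inputs = [[1.0, 2.0], [3.0, 0.0]]  # fixed input embeddings
prompt_vectors = train_soft_prompt(weights, inputs, target=5.0)
print(round(frozen_model(weights, prompt_vectors + inputs), 4))  # 5.0
```

Only `prompt_vectors` changes during training, which is why one frozen model can serve many tasks, each with its own small learned prompt.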

Choosing between prompt engineering and fine-tuning depends on the specific task, available resources, and desired level of accuracy.

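Prompt engineering is ideal when resources are limited, when you need to adapt quickly across many different tasks, or when you don't want to change the model's parameters.

Fine-tuning is the preferred choice when you need more precise results on a specific task and have both a high-quality, task-specific dataset and the computational resources to train on it.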

To get the most out of LLMs, it's crucial to follow best practices for prompt engineering:

The more precise your prompt, the better the LLM can understand your intent. Avoid ambiguity and focus on providing clear instructions and context.

Structure your prompts logically, separating instructions from the context and input. This helps the model process your request more effectively.
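A simple way to keep these parts apart is to give each its own labeled, delimited section. The `###` headers and the helper name `build_structured_prompt` in the sketch below are one assumed convention among many; what matters is that instructions, context, and input never run together.

```python
def build_structured_prompt(instructions: str, context: str, user_input: str) -> str:
    """Keep instructions, reference context, and the input in clearly delimited sections."""
    return (
        f"### Instructions\n{instructions}\n\n"
        f"### Context\n{context}\n\n"
        f"### Input\n{user_input}"
    )


prompt = build_structured_prompt(
    "Answer the question using only the context. If the answer is not there, say so.",
    "Store hours: Monday to Friday, 9:00 to 17:00. Closed on weekends.",
    "Is the store open on Saturday?",
)
print(prompt)
```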

Prompt engineering is an iterative process. Be prepared to refine your prompts, try different wordings, and adjust the level of detail to get the best results.

Use prompt templates for common tasks to standardize the prompt structure and ensure consistency.

Experiment with different prompting techniques and test your prompts with different models to assess their robustness.

LLM prompting is an evolving field, with new techniques and best practices emerging constantly.

New trends include more sophisticated methods for chain-of-thought prompting, better ways to incorporate external knowledge, and techniques to make LLMs more robust and reliable.

Prompting will continue to play a crucial role in AI development, serving as the primary interface between humans and LLMs. As models become more complex, effective prompting will become even more critical.

By 2025, we can expect to see more advanced prompting techniques that leverage the model's internal workings to achieve even better results. Techniques like soft prompting and other methods that rely on gradient-based optimization will become more accessible and easier to implement.
