Meet ModernBERT: The Exciting New Replacement for BERT

AI researcher with expertise in deep learning and generative models.

Key Takeaways:

ModernBERT is a new encoder-only model developed by Answer.AI and LightOn. It is a significant upgrade over the original BERT, designed to be both faster and more accurate.

ModernBERT has a context length of 8,192 tokens, much longer than most encoders support (the original BERT is limited to 512). According to its authors, it is also the first encoder-only model trained on a large amount of code. This makes it suitable for new applications such as large-scale code search and new IDE features.
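To make the context-length difference concrete, here is a minimal sketch that counts how many windows an encoder needs to cover one long input. The helper `windows_needed` and the 20,000-token document are hypothetical, chosen only for illustration; 512 is the original BERT limit and 8,192 is the ModernBERT limit quoted above.

```python
import math


def windows_needed(num_tokens: int, context_length: int, overlap: int = 0) -> int:
    """Count the windows of `context_length` tokens needed to cover an input.

    Consecutive windows share `overlap` tokens, a common trick to avoid
    cutting a sentence or function exactly at a window boundary.
    """
    if context_length <= overlap:
        raise ValueError("context_length must be larger than overlap")
    if num_tokens <= context_length:
        return 1
    stride = context_length - overlap
    return 1 + math.ceil((num_tokens - context_length) / stride)


doc_tokens = 20_000  # hypothetical long source file, measured in tokens

for limit in (512, 8192):
    print(f"context {limit:>5}: {windows_needed(doc_tokens, limit)} windows")
# context   512: 40 windows
# context  8192: 3 windows
```

Fewer windows means fewer forward passes and fewer places where related text is split apart, which is one reason a longer context can help with tasks like code search.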

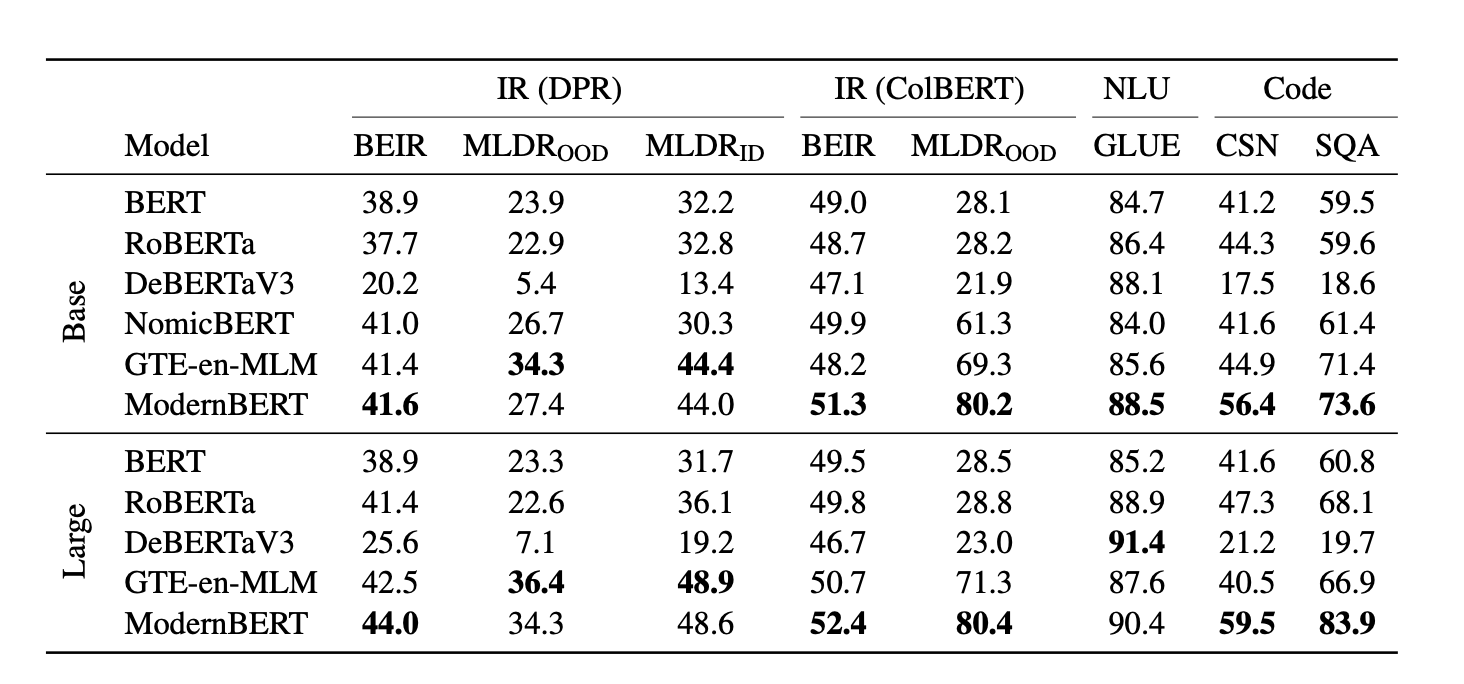

ModernBERT outperforms older encoders such as DeBERTaV3 on many tasks. It is reported to be about twice as fast as DeBERTa while using less than one-fifth of DeBERTa's memory. It is also faster than other high-quality models such as NomicBERT and GTE-en-MLM.

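The model's architecture is based on the Transformer++ design. It includes updates such as rotary positional embeddings (RoPE) and GeGLU layers. RoPE encodes each token's position as a rotation of its query and key vectors, and GeGLU replaces the standard feed-forward layer with a gated GELU unit. These changes improve the model's efficiency and its ability to use context.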
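The two components named above can be sketched in a few lines of plain Python. This is the textbook formulation of RoPE and GeGLU, not ModernBERT's actual implementation; the function names, the `base=10000.0` default, and the toy vectors and weights are all assumptions made for illustration.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotary embedding: rotate each consecutive pair of `vec` by an angle
    that grows with `position`. `base` is an assumed, conventional default."""
    d = len(vec)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # lower frequency for later pairs
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * c - y * s, x * s + y * c]
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def gelu(x):
    """Exact GELU using the Gaussian error function."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def matvec(matrix, vec):
    return [dot(row, vec) for row in matrix]


def geglu(x, w_gate, w_value):
    """GeGLU: GELU(w_gate @ x) multiplied elementwise by (w_value @ x)."""
    gate = [gelu(g) for g in matvec(w_gate, x)]
    value = matvec(w_value, x)
    return [g * v for g, v in zip(gate, value)]


# Toy query/key vectors (hypothetical values).
q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.0]

# The attention score depends only on the relative offset (2 in both cases),
# not on the absolute positions.
near = dot(rope(q, 5), rope(k, 3))
far = dot(rope(q, 12), rope(k, 10))
print(abs(near - far) < 1e-9)  # True

# A tiny GeGLU layer mapping 2 inputs to 2 outputs (hypothetical weights).
print(geglu([1.0, 2.0], [[0.5, 0.5], [0.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]]))
```

The first check shows the property that makes RoPE attractive: shifting both tokens by the same amount leaves their attention score unchanged. In the GeGLU call, the second output is zero because its gate input is zero, which shows how the gate can switch a value channel off.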

ModernBERT uses alternating attention, unpadding, and sequence packing to improve processing efficiency. Alternating attention mixes full (global) attention layers with cheaper local-attention layers, while unpadding and sequence packing avoid spending compute on padding tokens. Together these let it process long and variable-length inputs much faster than other models, and they help it run well on smaller, cheaper GPUs.
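A minimal sketch of these three ideas, in plain Python rather than real tensor code. All function names are hypothetical, `cu_seqlens` is a name borrowed from common practice for cumulative sequence boundaries, and the defaults `global_every=3` and `local_window=128` are illustrative settings that this article does not state.

```python
def pad_batch(seqs, pad_id=0):
    """Classic batching: pad every sequence to the longest one."""
    longest = max(len(s) for s in seqs)
    return [s + [pad_id] * (longest - len(s)) for s in seqs]


def unpad_and_pack(seqs):
    """Concatenate sequences into one flat list and record the boundaries,
    so that no compute is spent on padding tokens."""
    flat, cu_seqlens = [], [0]
    for s in seqs:
        flat.extend(s)
        cu_seqlens.append(len(flat))
    return flat, cu_seqlens


def may_attend(i, j, cu_seqlens, window=None):
    """Can packed token i attend to packed token j?

    Tokens never attend across sequence boundaries. With `window` set,
    attention is also limited to window // 2 tokens on either side.
    """
    same_sequence = any(
        start <= i < end and start <= j < end
        for start, end in zip(cu_seqlens, cu_seqlens[1:])
    )
    if not same_sequence:
        return False
    return window is None or abs(i - j) <= window // 2


def layer_window(layer_idx, global_every=3, local_window=128):
    """Alternating attention: some layers are global (None), the rest local."""
    return None if layer_idx % global_every == 0 else local_window


seqs = [[5, 6, 7], [8], [9, 10, 11, 12, 13]]  # toy token ids
padded_slots = sum(len(row) for row in pad_batch(seqs))
flat, cu_seqlens = unpad_and_pack(seqs)

print(padded_slots, len(flat))  # 15 9
print(cu_seqlens)               # [0, 3, 4, 9]
print([layer_window(i) for i in range(6)])
# [None, 128, 128, None, 128, 128]
```

In this toy batch, padding processes 15 token slots to cover 9 real tokens, while the packed layout processes exactly 9. The saving grows when sequence lengths in a batch vary widely, which matches the claim above about variable-length inputs.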

The model was trained on 2 trillion tokens from various sources, including web documents, code, and scientific articles. This diverse training data helps ModernBERT perform well across different tasks. If you are interested in exploring other models like Meta's Llama 3.3 70B, check out our post on Unlocking the Power of Meta's Llama 3.3 70B: What You Need to Know.

ModernBERT is designed for practical use. It can be self-hosted on a client's own infrastructure, which keeps data under the client's control. It also integrates well into Retrieval Augmented Generation (RAG) pipelines, where an encoder retrieves the passages that a generator then uses. For such tasks, this makes it a cost-effective alternative to larger language models like GPT.
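As a rough illustration of where an encoder sits in a RAG pipeline, the sketch below ranks documents against a query and builds a prompt from the best matches. The `toy_embed` function is a bag-of-words stand-in so the example runs without a model; in a real pipeline that step would call an embedding model such as a fine-tuned ModernBERT. All names and the sample documents are hypothetical.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in for an encoder: word counts instead of a learned embedding."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    shared = a.keys() & b.keys()
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, docs, k=2):
    """Return the k documents most similar to the query."""
    q = toy_embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, toy_embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, passages):
    """Assemble the text that would be passed to a generator model."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "ModernBERT supports a context length of 8192 tokens",
    "The training data includes web documents code and scientific articles",
    "Rotary positional embeddings encode token positions",
]
query = "what context length does ModernBERT support"

top = retrieve(query, docs, k=1)
print(build_prompt(query, top))
```

Only the retrieval step runs on the encoder, so it can be hosted locally on modest hardware; the larger generator model is called once, with a short, relevant context.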

ModernBERT's efficiency and performance make it a valuable tool for many applications. Its ability to handle long contexts and code data opens up new possibilities for developers. As AI continues to evolve, models like ModernBERT will play a crucial role in advancing the field.
