A Simple Guide to Understanding LoRA for Fine-Tuning Large Models

AI researcher with expertise in deep learning and generative models.

Low-Rank Adaptation (LoRA) is a parameter-efficient technique for fine-tuning large language models (LLMs). Instead of updating every model parameter, as full fine-tuning does, LoRA freezes the pre-trained weights and adds small trainable low-rank matrices alongside selected weight matrices. Only these matrices are trained, so the model adapts to a new task with far fewer trainable parameters, which makes fine-tuning faster and less resource-intensive.
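In symbols, the idea can be sketched for a single weight matrix as follows. The names used here ($W_0$ for the frozen pre-trained weight, $A$ and $B$ for the trainable low-rank matrices, $r$ for the rank, and $\alpha$ for the scaling factor) follow the notation of the original LoRA paper and are assumptions of this sketch rather than something defined in the text above.

$$
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad W_0 \in \mathbb{R}^{d \times k},\;
B \in \mathbb{R}^{d \times r},\;
A \in \mathbb{R}^{r \times k},\;
r \ll \min(d, k)
$$

Only $A$ and $B$ receive gradient updates; $W_0$ stays fixed throughout fine-tuning.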

Introduced by researchers from Microsoft in 2021, LoRA emerged as a solution to the challenges of fine-tuning large models. The method builds on the observation that many pre-trained models are overparameterized, and on the hypothesis that the change in weights needed to adapt such a model to a new task has a low intrinsic rank. By restricting the update to low rank, LoRA modifies a model's behavior without the computational burden of full fine-tuning.

As AI applications expand, the need for efficient model adaptation becomes critical. Traditional fine-tuning methods often lead to high computational costs and prolonged training times, making them less feasible for many users. LoRA addresses these issues, enabling researchers and developers to adapt models to specific tasks while maintaining performance and minimizing resource usage.

One of the main advantages of LoRA is its lower computational cost. Because only the added low-rank matrices are trained, gradients and optimizer states are needed for a small fraction of the parameter count of the full model. This allows organizations with limited resources to fine-tune powerful models without incurring significant expenses.
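The size of that reduction is simple arithmetic. The sketch below compares the trainable parameters of a full update with those of a rank-r update for one weight matrix; the function names and the 4096 x 4096 layer size are hypothetical, chosen only for illustration.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r update: B is d x r and A is r x k."""
    return r * (d + k)


# Hypothetical layer size and rank, chosen only for illustration.
d, k, r = 4096, 4096, 8
full = full_params(d, k)
lora = lora_params(d, k, r)

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (r={r}):      {lora:,} trainable parameters")
print(f"fraction trained:  {lora / full:.4%}")
```

For this hypothetical layer, rank 8 trains 65,536 parameters instead of 16,777,216, or about 0.39% of the full matrix.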

LoRA's design also leads to substantial reductions in memory and storage. The frozen base weights still have to be loaded during fine-tuning, but gradients and optimizer states are kept only for the low-rank matrices, and a saved checkpoint needs to contain only those matrices rather than a full copy of the model. For large models this can save gigabytes per task, making it easier to maintain many task-specific adapters on top of one shared base model.
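A rough back-of-the-envelope estimate shows where the savings come from. The sketch below assumes an Adam-style optimizer that keeps two values per trainable parameter in 32-bit precision and checkpoints stored in 16-bit precision; the model size (7 billion parameters) and adapter size (4 million parameters) are hypothetical figures, not measurements.

```python
def adam_state_bytes(n_trainable: int, bytes_per_value: int = 4) -> int:
    """Bytes for Adam's two moment estimates, one pair per trainable parameter."""
    return 2 * n_trainable * bytes_per_value


def checkpoint_bytes(n_params: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to store n_params values on disk."""
    return n_params * bytes_per_value


GIB = 1024 ** 3
MIB = 1024 ** 2

# Hypothetical sizes, chosen only for illustration.
base_params = 7_000_000_000
adapter_params = 4_000_000

print(f"Adam state, full fine-tuning: {adam_state_bytes(base_params) / GIB:.1f} GiB")
print(f"Adam state, LoRA adapter:     {adam_state_bytes(adapter_params) / MIB:.1f} MiB")
print(f"Checkpoint, full model:       {checkpoint_bytes(base_params) / GIB:.1f} GiB")
print(f"Checkpoint, LoRA adapter:     {checkpoint_bytes(adapter_params) / MIB:.1f} MiB")
```

The estimate ignores activations and the base weights themselves, which take the same memory in both cases.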

Training time is another area where LoRA can help. The forward pass still runs through the full model, but gradients are computed and optimizer updates applied for far fewer parameters, so each training step is usually cheaper. This allows practitioners to iterate quickly, which is valuable in environments where speed is essential.

LoRA can also help a model retain its general capabilities. Because the pre-trained weights stay frozen and the update is constrained to low rank, the model tends to keep its general knowledge while adapting to a new task. This reduces, though it does not eliminate, the risk of catastrophic forgetting, where the model loses its prior knowledge when fine-tuned for a new task.

The rank (r) of the low-rank matrices is a critical hyperparameter in LoRA. A smaller rank reduces the number of trainable parameters, making the process more efficient. However, an excessively low rank may hinder the model's ability to learn task-specific nuances. It is advisable to experiment with different ranks to find the optimal balance between efficiency and performance.
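One way to build intuition for this trade-off is to measure how well a rank-r matrix can approximate a given weight update. The sketch below is an illustration rather than LoRA training itself: it builds a synthetic update `delta_w` with a known rank of 4 (a hypothetical target) and uses truncated SVD, which gives the best rank-r approximation in the Frobenius norm, to report the error for several values of r.

```python
import numpy as np


def best_rank_r_error(delta_w: np.ndarray, r: int) -> float:
    """Relative Frobenius error of the best rank-r approximation of delta_w."""
    u, s, vt = np.linalg.svd(delta_w, full_matrices=False)
    approx = (u[:, :r] * s[:r]) @ vt[:r, :]  # keep the r largest singular values
    return float(np.linalg.norm(delta_w - approx) / np.linalg.norm(delta_w))


rng = np.random.default_rng(0)
true_rank = 4  # hypothetical rank of the "ideal" update
delta_w = rng.normal(size=(64, true_rank)) @ rng.normal(size=(true_rank, 32))

for r in (1, 2, 4, 8):
    print(f"r={r}: relative error {best_rank_r_error(delta_w, r):.3e}")
```

Ranks below the true rank leave a visible error, while ranks at or above it add parameters without improving the approximation. Real weight updates have no known rank, which is why the rank is tuned empirically.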

Low-rank matrices A and B are fundamental to LoRA's architecture: their product BA approximates the weight change needed during fine-tuning. Initialization can significantly impact performance. A common choice is a random initialization for A, such as Kaiming initialization, with B set to zero, so that BA is zero at the start and training begins from the pre-trained model's behavior.
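A minimal NumPy sketch of a single LoRA layer makes the role of the two matrices concrete. It assumes a zero initialization for B alongside a Kaiming-style uniform initialization for A (the exact bound used here is one variant, chosen as an assumption), and the class name `LoRALinear` is hypothetical. There is no training loop; the point is only that the adapted layer reproduces the frozen layer before any update.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W0 plus a low-rank update B @ A (no bias, forward pass only)."""

    def __init__(self, w0: np.ndarray, r: int, alpha: float, seed: int = 0):
        d_out, d_in = w0.shape
        rng = np.random.default_rng(seed)
        self.w0 = w0                          # frozen pre-trained weight
        bound = np.sqrt(6.0 / d_in)           # Kaiming-style uniform bound (assumed variant)
        self.a = rng.uniform(-bound, bound, size=(r, d_in))
        self.b = np.zeros((d_out, r))         # zero init, so B @ A is zero at the start
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        base = x @ self.w0.T                  # output of the frozen layer
        update = (x @ self.a.T) @ self.b.T    # low-rank path, never forms B @ A explicitly
        return base + self.scale * update


rng = np.random.default_rng(1)
w0 = rng.normal(size=(16, 32))
x = rng.normal(size=(4, 32))

layer = LoRALinear(w0, r=4, alpha=8)
print(np.allclose(layer(x), x @ w0.T))  # True: the update is zero before training
```

Computing `(x @ A.T) @ B.T` instead of building the full `B @ A` matrix keeps the extra cost proportional to the rank.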

Two crucial hyperparameters in LoRA are alpha (α) and the learning rate (LR). The low-rank update is multiplied by α/r, so α controls how strongly the update influences the layer's output, while the learning rate dictates how quickly the low-rank matrices change. Because both affect the effective step size, they are best tuned together. Setting α to twice the rank value is a common heuristic that often yields good results.
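In the original LoRA formulation the low-rank update is multiplied by α/r, which is what ties α to the rank. The short sketch below (the helper name `lora_scale` and the example values are hypothetical) compares a fixed α with the α = 2r heuristic across several ranks.

```python
def lora_scale(alpha: float, r: int) -> float:
    """Multiplier applied to B @ A in the original LoRA formulation."""
    return alpha / r


for r in (4, 8, 16, 64):
    fixed = lora_scale(16, r)      # alpha held at 16 while the rank changes
    tied = lora_scale(2 * r, r)    # alpha = 2r heuristic
    print(f"r={r:>2}  alpha=16 -> scale {fixed:.2f}   alpha=2r -> scale {tied:.2f}")
```

With α tied to the rank, the multiplier stays at 2 for every r, whereas a fixed α shrinks the update as the rank grows.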

To reduce overfitting during fine-tuning, it helps to use dropout and other regularization techniques such as weight decay. In LoRA, dropout is typically applied to the input of the low-rank branch, which helps the model generalize better, especially when training data is limited.
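The sketch below shows one way dropout can be combined with the low-rank update, assuming it is applied to the input of the low-rank path only, as common LoRA implementations do. The function name `lora_branch`, the dropout probability, and the matrix sizes are hypothetical.

```python
import numpy as np


def lora_branch(x, a, b, scale, p=0.1, training=True, rng=None):
    """Low-rank branch scale * B A dropout(x), using inverted dropout on the input."""
    if training and p > 0.0:
        if rng is None:
            rng = np.random.default_rng()
        keep = rng.random(x.shape) >= p    # keep each input value with probability 1 - p
        x = x * keep / (1.0 - p)           # rescale so the expected value is unchanged
    return scale * (x @ a.T) @ b.T


rng = np.random.default_rng(0)
a = rng.normal(size=(4, 32))    # A: r x d_in
b = rng.normal(size=(16, 4))    # B: d_out x r
x = rng.normal(size=(8, 32))    # batch of 8 inputs

train_out = lora_branch(x, a, b, scale=2.0, p=0.1, training=True, rng=rng)
eval_out = lora_branch(x, a, b, scale=2.0, training=False)
print(train_out.shape, eval_out.shape)
```

Dropout is active only during training; at evaluation time the branch is deterministic, and the frozen base path is unaffected in both modes.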

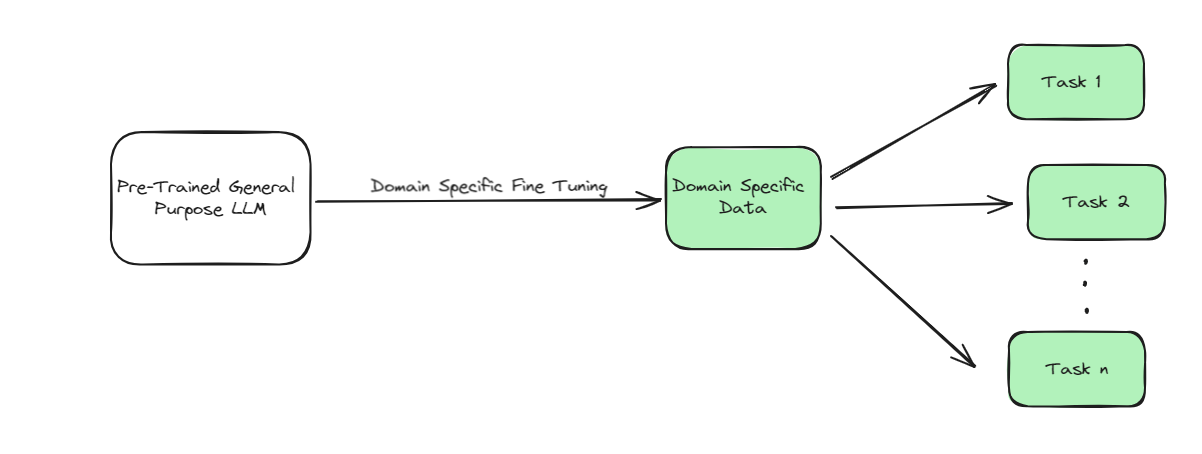

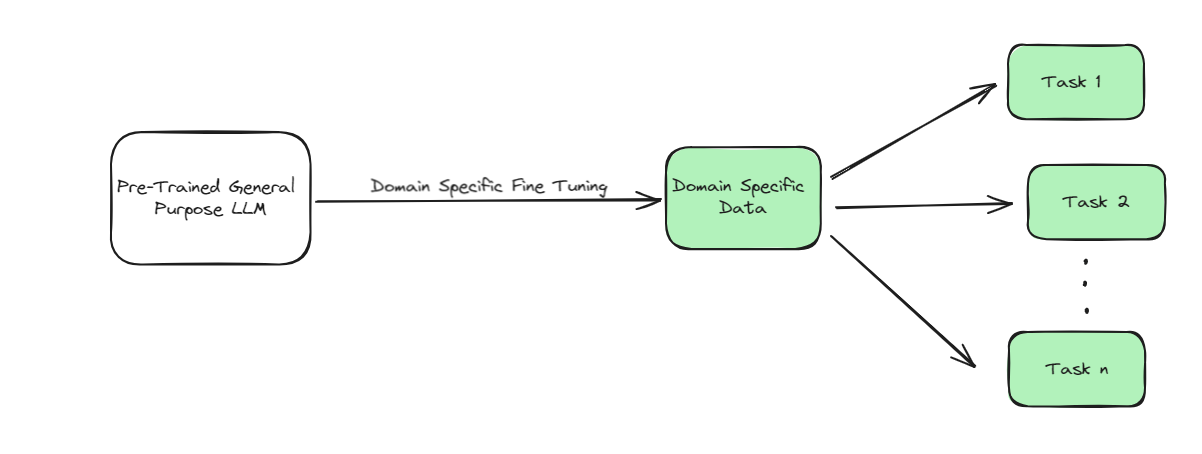

Traditional full fine-tuning updates all parameters in a model when adapting it to a new task, which often leads to significant resource consumption and longer training times. Alternatives that update only part of the model, such as adapter-based fine-tuning and layer-wise tuning, are also commonly employed.

On standard performance metrics, LoRA can match or even exceed full fine-tuning with far fewer trainable parameters; the original LoRA paper reports this for models including RoBERTa, DeBERTa, GPT-2, and GPT-3. The efficiency gain is most evident in large models, where full parameter updates would be prohibitively expensive.

LoRA excels in scenarios where resources are constrained or when multiple models need to be fine-tuned for various tasks. Its ability to maintain a small memory footprint while offering robust performance makes it a preferred choice for many practitioners.

One notable application of LoRA is in fine-tuning BERT models for specific NLP tasks. By integrating low-rank matrices into the architecture, researchers have successfully adapted BERT for tasks such as sentiment analysis and named entity recognition with minimal additional computational overhead.

LoRA has also been applied to text generation tasks, where models are fine-tuned to produce coherent and contextually relevant outputs. By using LoRA, developers can adjust the model's behavior while preserving its general knowledge, leading to improved results in creative writing and dialogue generation.

In computer vision, LoRA has been applied to image classification tasks. By adapting models like ResNet with low-rank updates, practitioners can improve performance on specific datasets without updating every weight in the model.

LoRA stands out as an innovative solution for efficiently fine-tuning large language models. Its ability to reduce computational costs, lower memory requirements, and speed up training times makes it an attractive option for researchers and developers alike.

As AI continues to evolve, techniques like LoRA will play a crucial role in the ongoing development of adaptable models. The efficient fine-tuning of large models will be essential for expanding their applications and ensuring that they remain accessible to a wide range of users.

In summary, LoRA represents a significant advancement in the field of AI fine-tuning. By allowing for efficient model adaptation with minimal resource usage, it opens up new avenues for applying large models across various domains.

Key Takeaways:

Learn more about the implementation of LoRA in deep learning projects through practical applications and case studies. For further exploration, consider reading "Discovering the Llama Large Language Model Family" and "Understanding OpenAI's Reinforcement Fine-Tuning".
