Understanding Neural Networks: A Simple Breakdown of How They Work

AI researcher with expertise in deep learning and generative models.

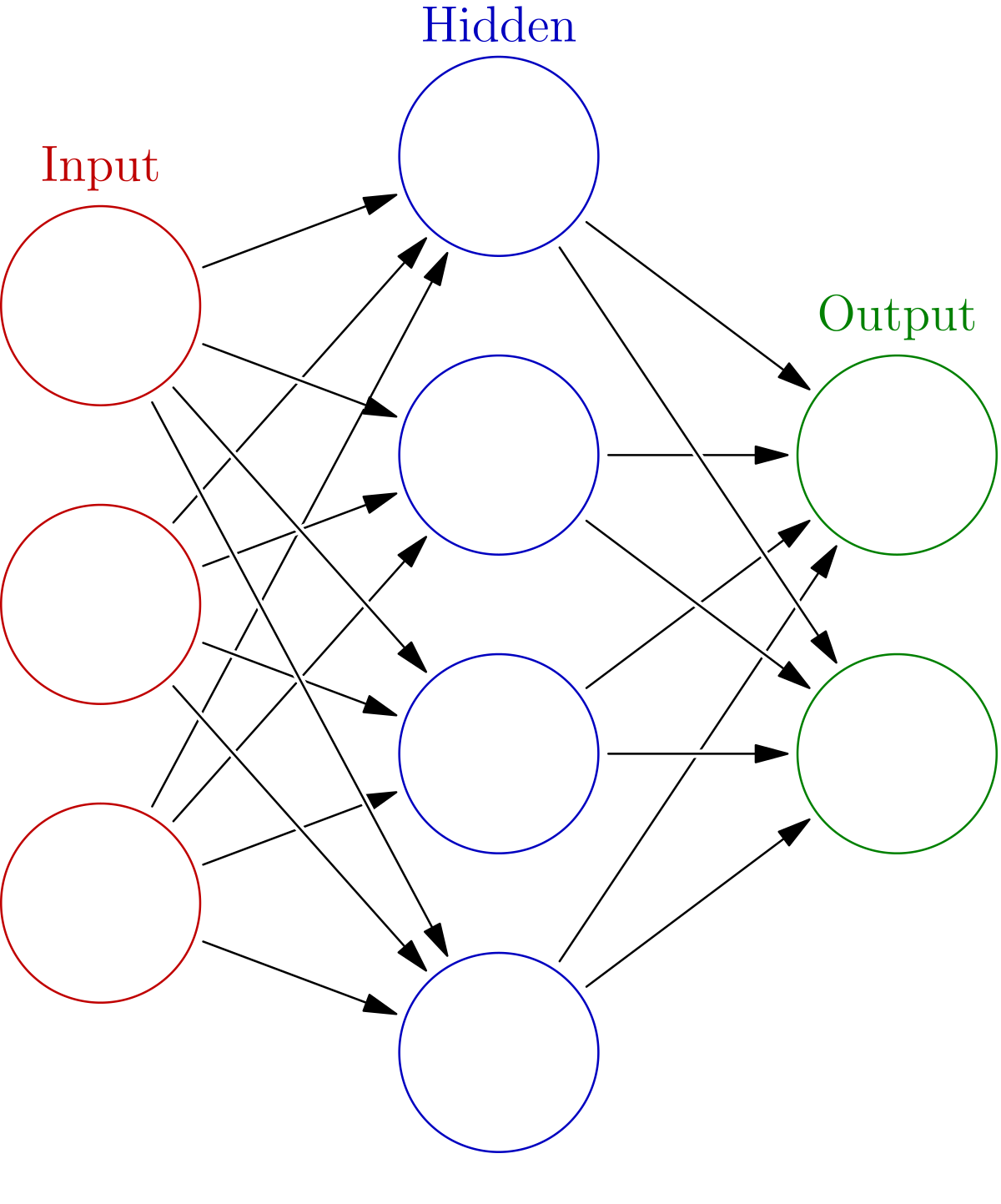

Neural networks are computational models inspired by the human brain’s architecture. They consist of interconnected nodes, or neurons, that work together to process and analyze complex data inputs. At their core, these networks excel at pattern recognition, making them essential in various artificial intelligence (AI) applications.

The design of neural networks is loosely based on how biological neurons communicate in the brain. Just as neurons transmit signals through synapses, artificial neurons pass information along weighted connections. This structure enables neural networks to learn from experience: as they are exposed to more data, training adjusts the connection weights, strengthening some and weakening others, much as our brains reinforce neural pathways with repeated use.

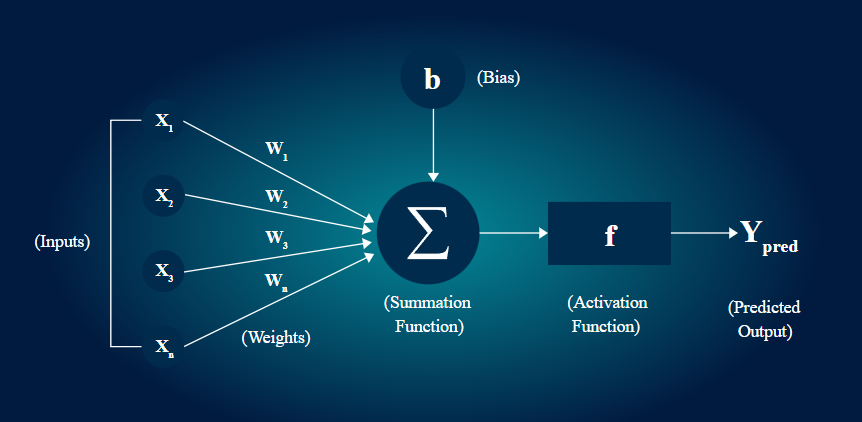

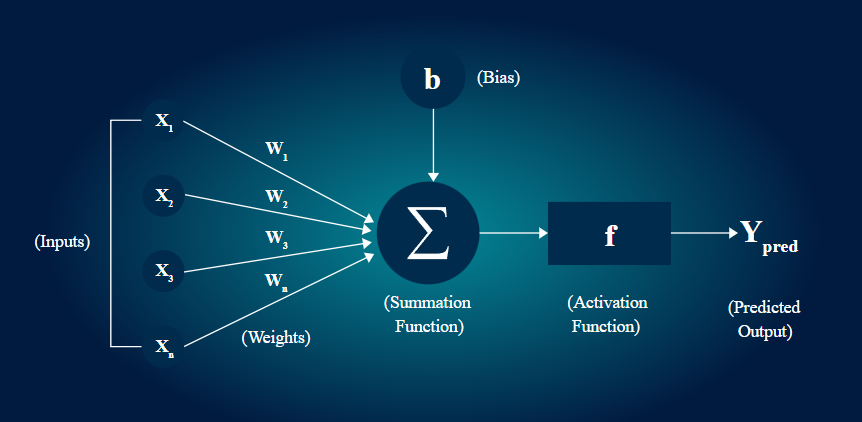

Each neuron in a neural network performs a simple computation on its inputs. Each input is multiplied by a weight that sets how strongly it influences the neuron, and the weighted inputs are summed. A bias is then added to the weighted sum, which shifts the point at which the neuron's activation function responds. This combination of weights and bias, repeated across many neurons, enables the network to learn complex relationships within the data.
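The computation of a single neuron can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function name `neuron_output` and the example numbers are hypothetical, and no activation function is applied yet.

```python
def neuron_output(inputs, weights, bias):
    """Return the weighted sum of the inputs plus the bias (pre-activation)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Multiply each input by its weight, add the products, then add the bias.
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Hypothetical example values, chosen only for illustration.
z = neuron_output([1.0, 2.0, 3.0], [0.5, -1.0, 2.0], bias=0.5)
print(z)  # 0.5 - 2.0 + 6.0 + 0.5 = 5.0
```

Changing a weight changes how much the corresponding input matters, while changing the bias moves the whole sum up or down regardless of the inputs.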

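Neural networks are organized into layers, each playing a distinct role in data processing: an input layer receives the raw data, one or more hidden layers transform it, and an output layer produces the final result.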

Data flows through the network in a process known as forward propagation. Each neuron receives input, processes it by applying weights and biases, and then passes the output to the next layer. This process continues until the output layer is reached.
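Forward propagation can be illustrated with a small pure-Python sketch. It assumes fully connected layers and a sigmoid activation; the helper names (`layer_forward`, `forward`) and the 2-3-1 network with its weights are hypothetical values picked for the example.

```python
import math


def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute one layer's outputs; `weights` holds one row per neuron."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the inputs through each (weights, biases) layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.1, 0.2], [-0.3, 0.4], [0.5, -0.6]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.8, 0.9]], [0.05]),
]
output = forward([1.0, 0.5], layers)
print(output)  # a single value between 0 and 1
```

Each layer's output list becomes the next layer's input list, which is all that "passing the output to the next layer" means in practice.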

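Activation functions transform a neuron's weighted sum into its output, determining how strongly the neuron fires for a given input and introducing the non-linearity that lets the network model complex patterns. Common activation functions include the sigmoid, the hyperbolic tangent (tanh), and the rectified linear unit (ReLU).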
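As a rough illustration, three widely used activation functions (sigmoid, tanh, and ReLU) can be written with only the standard library. Picking these three is an assumption made for the example; many other activation functions exist.

```python
import math


def sigmoid(z):
    """Map any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Map any real number into (-1, 1), centered on zero."""
    return math.tanh(z)


def relu(z):
    """Pass positive values through unchanged and clamp negatives to zero."""
    return max(0.0, z)


# Compare how each function responds to the same pre-activation values.
for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```

Sigmoid and tanh saturate for large positive or negative inputs, while ReLU stays linear for positive inputs, which is one reason it is a frequent default in deep networks.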

Training a neural network involves adjusting the weights and biases based on the data it processes. This is typically done through two main techniques:

Neural networks use various learning algorithms to optimize their performance. The most common method is gradient descent, which adjusts weights to minimize the error between predicted and actual outputs.
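To make gradient descent concrete, here is a minimal sketch that fits a single linear neuron, `y = w * x + b`, by repeatedly stepping against the gradient of the mean squared error. The function name, learning rate, step count, and toy data are all assumptions chosen for illustration; real networks compute gradients for many weights at once, typically via backpropagation.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=1000):
    """Fit y = w * x + b by minimizing mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every training example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(2 * e for e in errors) / n
        # Step in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (hypothetical example).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0
```

The learning rate controls the step size: too large and the updates can overshoot and diverge, too small and training takes many more steps.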

Neural networks are widely used across various industries and applications, showcasing their versatility:

Neural networks excel in recognizing patterns in images and speech. For instance, convolutional neural networks (CNNs) are often employed in computer vision tasks, such as identifying objects in pictures and facial recognition.

In natural language processing (NLP), recurrent neural networks (RNNs) are commonly used for tasks like language translation and sentiment analysis.

Neural networks help businesses predict trends by analyzing historical data, making them invaluable in finance and marketing.

In healthcare, neural networks are utilized for diagnosing diseases from medical images, improving patient outcomes through early detection.

Neural networks assist in financial modeling by predicting stock prices and identifying trading opportunities based on historical data patterns.

Feedforward neural networks are the simplest type of neural network: data flows in one direction, from input to output.

CNNs are designed for processing structured grid data, such as images. They are especially effective in recognizing spatial hierarchies.
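The core operation inside a CNN layer is sliding a small kernel over the image grid. The sketch below is a simplified, assumption-laden version: it handles a single channel, uses no padding or stride, and the `conv2d` helper, the tiny image, and the hand-picked kernel are hypothetical. In a real CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 3x4 image that is dark (0) on the left and bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked 1x2 kernel that responds to a dark-to-bright step.
kernel = [[-1, 1]]
feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The feature map is non-zero only where the brightness changes, which shows how one kernel detects one local pattern wherever it appears in the image. Stacking such layers lets later kernels combine simple patterns into larger ones.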

RNNs are suitable for sequential data tasks, such as time series prediction and language modeling, as they maintain a memory of previous inputs.
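The "memory" of an RNN is a hidden state that is fed back in at every step. A minimal sketch with a single scalar hidden state follows; the function names and the weights `w_x`, `w_h`, and `b` are hypothetical values used only to show the mechanism.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """Combine the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence one element at a time, carrying the hidden state."""
    h = 0.0  # initial hidden state: no memory yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# One non-zero input followed by zeros.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

Even though the second and third inputs are zero, the hidden state stays positive and fades gradually, because each step still sees a trace of the first input through `h_prev`.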

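Generative adversarial networks (GANs) consist of two networks, a generator and a discriminator, that are trained against each other: the generator produces new data instances while the discriminator tries to tell them apart from real data. This competition makes GANs powerful for content creation.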

Neural networks differ from traditional machine learning models in their ability to learn features directly from data rather than relying on handcrafted features.

While neural networks can appear opaque in their decision-making, various techniques are being developed to enhance their interpretability, such as explainable AI (XAI) methods.

Despite their capabilities, neural networks can perpetuate biases present in training data. Ethical considerations in their deployment are essential to ensure fairness and accountability.

The field of neural networks is rapidly evolving, with innovations in architecture and training techniques enhancing their capabilities.

Neural networks are at the forefront of AI advancements, pushing boundaries in areas like autonomous systems and intelligent decision-making.

As neural networks become more integral to AI, addressing challenges such as interpretability, bias, and data privacy will be crucial for their responsible development.

Neural networks are powerful tools that have revolutionized AI technologies, enabling advancements across various fields.

As the landscape of AI continues to evolve, exploring the depths of neural networks will open new doors for innovation and understanding.

Key Takeaways:

Feel free to dive deeper into related topics such as "How AI Models Spot Fraud in Transactions" or "Step-by-Step Guide to Building a CNN for Cassava Leaf Disease Detection" for more insights into the capabilities of AI and neural networks.
