Understanding Satellite Image Segmentation and Its Real-World Uses

AI researcher with expertise in deep learning and generative models.

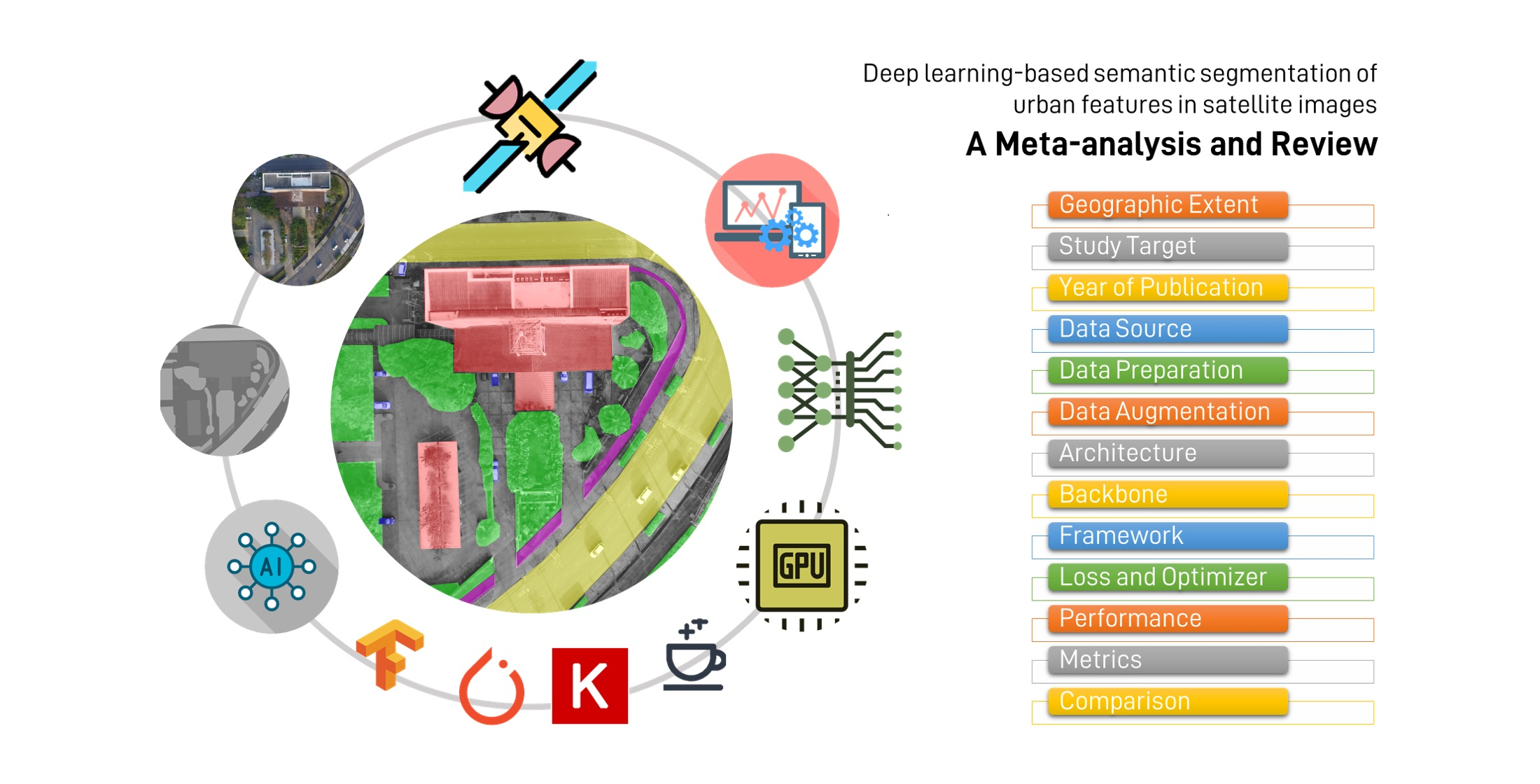

Satellite image segmentation divides a satellite image into multiple segments or regions. Each segment represents a specific object or area of interest, such as buildings, roads, or vegetation.

This technique assigns a label to every pixel in the image. It helps in identifying regions and the boundaries between them.
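As a minimal sketch of what "a label for every pixel" looks like in practice, the toy example below stores a segmentation result as a grid of class IDs, counts the pixels per class, and checks whether a pixel lies on a boundary between regions. The class names, the 4x4 grid, and both helper functions are assumptions made for illustration.

```python
from collections import Counter

# Hypothetical class IDs for a toy 4x4 segmentation mask.
CLASS_NAMES = {0: "vegetation", 1: "building", 2: "road"}

mask = [
    [0, 0, 2, 1],
    [0, 0, 2, 1],
    [2, 2, 2, 0],
    [1, 1, 2, 0],
]

def class_counts(mask):
    """Count how many pixels carry each class ID."""
    return Counter(label for row in mask for label in row)

def is_boundary(mask, r, c):
    """True if any 4-connected neighbour of (r, c) has a different label."""
    h, w = len(mask), len(mask[0])
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < h and 0 <= nc < w and mask[nr][nc] != mask[r][c]:
            return True
    return False

for class_id, n in sorted(class_counts(mask).items()):
    print(CLASS_NAMES[class_id], n)
```

Real masks are usually stored as arrays (for example in a GeoTIFF), but the idea is the same: one integer per pixel.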

Image segmentation is crucial in remote sensing for several reasons. It allows for the detailed analysis of land use and land cover.

It also aids in monitoring changes over time, such as urban expansion or deforestation. This information is vital for urban planning, environmental monitoring, and disaster management. (See also: Real-Time Deforestation Tracking: How AI is Changing the Game.)

There are three main types of image segmentation.

Semantic segmentation involves classifying each pixel in an image into a specific class. For example, all pixels representing buildings are labeled as "building," while all pixels representing roads are labeled as "road."
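One common way to arrive at a per-pixel class is to take, for each pixel, the class with the highest score. The sketch below assumes a model has already produced one score per class for every pixel; the scores, the class list, and the `argmax_labels` helper are made up for illustration.

```python
CLASSES = ["background", "building", "road"]  # hypothetical class list

def argmax_labels(scores):
    """scores[r][c] is a list of per-class scores; return the winning class index per pixel."""
    return [
        [max(range(len(px)), key=px.__getitem__) for px in row]
        for row in scores
    ]

# Toy 2x2 image with three class scores per pixel (assumed model output).
scores = [
    [[0.1, 0.8, 0.1], [0.2, 0.7, 0.1]],
    [[0.6, 0.1, 0.3], [0.1, 0.2, 0.7]],
]

label_map = argmax_labels(scores)
named = [[CLASSES[i] for i in row] for row in label_map]
print(named)
```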

This technique provides a broad understanding of the different classes present in an image. It is widely used in various applications such as autonomous driving, medical image analysis, and remote sensing.

Instance segmentation goes a step further than semantic segmentation. It distinguishes between different instances of the same class.

For example, it can differentiate between individual buildings in an image. This allows for a more detailed analysis of the objects present in the scene.
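To illustrate the difference, the sketch below turns a binary "building" mask into separate instances using connected-component labelling. This is a deliberately simple stand-in, not how deep instance segmentation models work, and it would merge buildings that touch; the mask and the `label_instances` helper are assumptions for illustration.

```python
from collections import deque

def label_instances(binary_mask):
    """Give each 4-connected group of 1-pixels its own instance ID (1, 2, ...)."""
    h, w = len(binary_mask), len(binary_mask[0])
    instances = [[0] * w for _ in range(h)]
    next_id = 0
    for r in range(h):
        for c in range(w):
            if binary_mask[r][c] == 1 and instances[r][c] == 0:
                next_id += 1
                instances[r][c] = next_id
                queue = deque([(r, c)])
                # Breadth-first flood fill over the connected region.
                while queue:
                    cr, cc = queue.popleft()
                    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                        nr, nc = cr + dr, cc + dc
                        if (0 <= nr < h and 0 <= nc < w
                                and binary_mask[nr][nc] == 1
                                and instances[nr][nc] == 0):
                            instances[nr][nc] = next_id
                            queue.append((nr, nc))
    return instances, next_id

# 1 = building pixel, 0 = anything else (toy semantic output).
building_mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 1],
]

instances, count = label_instances(building_mask)
print(count, instances)
```

A semantic mask would label all six building pixels identically; here the two separate buildings receive IDs 1 and 2.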

Panoptic segmentation combines both semantic and instance segmentation. It assigns a class label to each pixel.

It also differentiates between different instances of the same class. This provides a comprehensive understanding of the scene.
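One way to store both pieces of information in a single map is to pack the class ID and the instance ID into one integer per pixel. The sketch below uses `class_id * 1000 + instance_id`, which is an assumed convention for illustration; the offset, the toy maps, and the helper names are hypothetical.

```python
OFFSET = 1000  # assumed: must exceed the largest instance ID within any class

def to_panoptic(semantic, instance):
    """Combine per-pixel class IDs and instance IDs into one panoptic ID map."""
    return [
        [s * OFFSET + i for s, i in zip(srow, irow)]
        for srow, irow in zip(semantic, instance)
    ]

def from_panoptic(panoptic_id):
    """Split a panoptic ID back into (class_id, instance_id)."""
    return divmod(panoptic_id, OFFSET)

# Hypothetical classes: 0 = vegetation, 1 = building, 2 = road.
semantic = [[1, 1, 0], [2, 2, 0]]
# Instance ID 0 means "no individual instance" (e.g. road or vegetation).
instance = [[1, 1, 0], [0, 0, 0]]

panoptic = to_panoptic(semantic, instance)
print(panoptic)
print(from_panoptic(panoptic[0][0]))
```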

Convolutional neural networks (CNNs) are a type of deep learning model. They are particularly effective for image analysis.

They can automatically learn hierarchical features from images. This makes them suitable for segmentation tasks.

Fully convolutional networks (FCNs) are an extension of CNNs. They are specifically designed for semantic segmentation.

They replace fully connected layers with convolutional layers. This allows them to produce pixel-wise segmentation maps.
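The idea can be sketched with a 1x1 convolution: the same small linear classifier is applied to the feature vector at every pixel, so the output keeps the spatial layout of the input instead of collapsing to a single prediction. The pure-Python version below is only an illustration; the feature values, weights, and the `conv1x1` helper are assumptions, and real models would use a deep learning framework.

```python
def conv1x1(features, weights, bias):
    """Apply a 1x1 convolution.

    features[r][c] is a list of C_in values, weights is C_out x C_in,
    bias has C_out entries. Returns C_out scores for every pixel.
    """
    return [
        [
            [sum(w * x for w, x in zip(w_row, px)) + b
             for w_row, b in zip(weights, bias)]
            for px in row
        ]
        for row in features
    ]

# Toy 2x2 feature map with 2 channels per pixel (assumed encoder output).
features = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 1.0], [0.0, 0.0]],
]
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 classes x 2 channels
bias = [0.0, 0.0, -0.5]

scores = conv1x1(features, weights, bias)
print(len(scores), len(scores[0]), len(scores[0][0]))  # height, width, classes
```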

U-Net is a popular architecture for semantic segmentation. It consists of a contracting path to capture context and a symmetric expanding path for precise localization.
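The symmetry of the two paths is easiest to see by tracing tensor shapes. The sketch below assumes a simplified U-Net with size-preserving convolutions, 2x downsampling per level, channel doubling on the way down, and skip connections that link each encoder level to the decoder level of the same size. The function name, the base width of 64 channels, and the depth of 4 are illustrative choices, not a reference implementation.

```python
def unet_shapes(size, base_channels=64, depth=4):
    """Trace (stage, height/width, channels) through a simplified U-Net."""
    if size % (2 ** depth) != 0:
        raise ValueError("size must be divisible by 2**depth in this sketch")

    trace, skips = [], []
    ch = base_channels

    # Contracting path: halve the resolution, double the channels.
    for level in range(depth):
        trace.append((f"enc{level}", size, ch))
        skips.append((size, ch))  # saved for the skip connection
        size //= 2
        ch *= 2

    trace.append(("bottleneck", size, ch))

    # Expanding path: double the resolution, halve the channels,
    # and merge with the encoder features of the same resolution.
    for level in reversed(range(depth)):
        skip_size, _ = skips[level]
        size *= 2
        ch //= 2
        if size != skip_size:
            raise RuntimeError("decoder and skip resolutions do not match")
        trace.append((f"dec{level}", size, ch))

    return trace

for stage in unet_shapes(256):
    print(stage)
```

The final decoder stage has the same resolution as the input, which is what allows a per-pixel prediction.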

Variants of U-Net, such as U-Net++ and RSUnet, have been developed to improve performance. They are also used in satellite image classification (see Understanding Satellite Image Classification and Its Benefits).

Satellite image segmentation can be used to identify different crop types. It helps to monitor their growth stages.

This information is crucial for optimizing agricultural practices. It helps to improve crop yields.

By providing detailed information about crop health and soil conditions, segmentation supports precision agriculture.

This includes targeted irrigation and fertilization. It helps to reduce resource waste and environmental impact.

In urban planning, satellite image segmentation helps in mapping and monitoring infrastructure. This includes roads, buildings, and other urban features.

This data is essential for planning new developments. It helps to manage existing infrastructure.

Segmentation is used to monitor environmental changes. It can also help map the extent of natural disasters such as floods or landslides.

This information is crucial for disaster response and mitigation efforts. It's also useful for assessing the impact of urbanization on the environment.

Satellite image segmentation is used to classify different land use types. These include forests, agricultural land, and urban areas.

This information is vital for land management. It can help with conservation efforts.
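Once every pixel has a land use label, the area of each class follows from counting pixels and multiplying by the ground area one pixel covers. The sketch below assumes square pixels with a 10 m ground resolution; the class IDs, the toy mask, and the `class_areas_hectares` helper are hypothetical.

```python
from collections import Counter

def class_areas_hectares(mask, class_names, pixel_size_m=10.0):
    """Return the area covered by each class, in hectares.

    pixel_size_m is the assumed ground size of one pixel edge in metres.
    """
    counts = Counter(label for row in mask for label in row)
    pixel_area_ha = (pixel_size_m ** 2) / 10_000  # 1 ha = 10,000 square metres
    return {
        name: counts.get(class_id, 0) * pixel_area_ha
        for class_id, name in class_names.items()
    }

LAND_USE = {0: "forest", 1: "agricultural land", 2: "urban"}

land_use_mask = [
    [0, 0, 1],
    [0, 2, 2],
]

areas = class_areas_hectares(land_use_mask, LAND_USE)
for name, hectares in areas.items():
    print(f"{name}: {hectares:.2f} ha")
```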

By comparing segmented images from different time periods, changes in urban areas can be detected.

This helps in understanding urban growth patterns and in planning for future development. (See also: How Satellite Image Classification is Revolutionizing Military Strategies.)
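A minimal sketch of this kind of comparison is shown below. It assumes the two masks are already co-registered (the same pixel covers the same ground location in both) and use the same class IDs; the toy masks and helper names are made up for illustration.

```python
from collections import Counter

def change_mask(before, after):
    """1 where the class label changed between the two dates, else 0."""
    return [
        [int(b != a) for b, a in zip(brow, arow)]
        for brow, arow in zip(before, after)
    ]

def transitions(before, after):
    """Count (old_class, new_class) pairs for pixels whose label changed."""
    return Counter(
        (b, a)
        for brow, arow in zip(before, after)
        for b, a in zip(brow, arow)
        if b != a
    )

# Hypothetical classes: 0 = non-urban, 1 = urban.
before = [[0, 0, 1], [0, 0, 1]]
after = [[0, 1, 1], [1, 1, 1]]

changed = change_mask(before, after)
growth = transitions(before, after)[(0, 1)]  # pixels that became urban
print(changed, growth)
```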

High-quality satellite imagery is not always available for all regions. Cloud cover and atmospheric conditions can affect image quality.

This can impact the accuracy of segmentation. Data quality can vary, leading to inconsistencies in analysis.

Advanced segmentation techniques, especially those based on deep learning, require significant computational resources.

This can be a limitation for organizations with limited computing power. Training complex models can be time-consuming.

Integrating data from multiple sensors, such as SAR and LiDAR, can enhance segmentation accuracy. Multi-source data fusion provides a more comprehensive view of the Earth's surface.

Combining different data types can improve the robustness of segmentation, according to the article "Revolutionizing urban mapping: deep learning and data fusion strategies for accurate building footprint segmentation".
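One simple fusion strategy, sketched below, is to stack the bands from each sensor into a single multi-channel input so that a model sees all of them for every pixel. This is only one possible approach and is not necessarily the one used in the cited article; it assumes the rasters are already resampled to the same grid, and the band values and the `stack_channels` helper are hypothetical.

```python
def stack_channels(*rasters):
    """Concatenate per-pixel channels from several H x W x C rasters."""
    height, width = len(rasters[0]), len(rasters[0][0])
    for raster in rasters:
        if len(raster) != height or any(len(row) != width for row in raster):
            raise ValueError("all rasters must share the same height and width")
    return [
        [
            [value for raster in rasters for value in raster[r][c]]
            for c in range(width)
        ]
        for r in range(height)
    ]

# Toy 1x2 rasters: two optical bands and one SAR band per pixel (made-up values).
optical = [[[0.2, 0.4], [0.3, 0.5]]]
sar = [[[0.9], [0.1]]]

fused = stack_channels(optical, sar)
print(fused)  # each pixel now carries three channels
```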

Research is ongoing to develop more efficient and accurate deep learning models. Innovations like the transformer architecture, originally developed for natural language processing, are being adapted for image segmentation tasks. (See also: Understanding Transformers Architecture: A Beginner's Simple Guide.)

These advancements can lead to better performance and reduced computational demands. This is highlighted in the article "A Beginner's Guide To Segmentation In Satellite Images."

Satellite image segmentation is a powerful technique for analyzing Earth observation data. It has wide-ranging applications in agriculture, urban planning, and environmental monitoring.

While challenges exist, ongoing advancements in machine learning and data integration promise to enhance its capabilities further. It continues to evolve as a critical tool for understanding and managing our planet.

Improved segmentation techniques can lead to more accurate and detailed insights. This can support better decision-making in various fields.

Enhanced capabilities can contribute to sustainable development. They can also help to improve disaster response efforts.

Key Takeaways: